AI Reconstructs ‘High-Quality’ Video Directly from Brain

Source: VICE

Introduction to MinD-Video

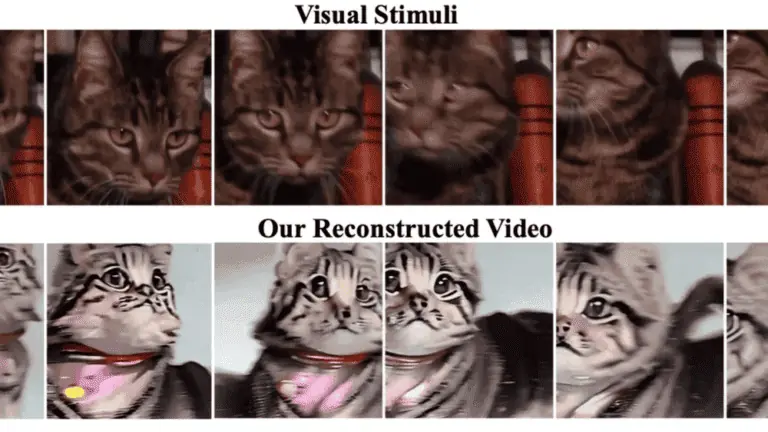

MinD-Video is a research project developed by Jiaxin Qing, Zijiao Chen, and Juan Helen Zhou from the National University of Singapore and The Chinese University of Hong Kong. It uses fMRI data and the text-to-image AI model Stable Diffusion to reconstruct high-quality videos directly from brain readings. The researchers describe the model as a two-module pipeline designed to bridge the gap between image and video brain decoding.

Special Features

MinD-Video combines a trained fMRI encoder with a fine-tuned version of Stable Diffusion. According to the researchers, the generated videos closely resemble the content viewed by test subjects, with a reported average accuracy of 85 percent on semantic classification metrics. The reconstructed videos show similar subjects and color palettes to the originals, which suggests the model captures both motion and scene dynamics.
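To make the two-module idea concrete, here is a minimal sketch in Python. It is not the researchers' code: the class names `ToyFMRIEncoder` and `ToyVideoGenerator`, the random weights, and the tiny frame sizes are all assumptions made for illustration. Random numbers stand in for both the trained fMRI encoder and the fine-tuned Stable Diffusion model, so the output is noise rather than a meaningful video. Only the data flow (brain signal, then embedding, then frames) reflects the description above.

```python
import random
from typing import List

Vector = List[float]
Frame = List[List[float]]  # a tiny grayscale frame as rows of pixel values


class ToyFMRIEncoder:
    """Module 1 (hypothetical stand-in): projects voxels to a small embedding."""

    def __init__(self, n_voxels: int, embed_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Random weights stand in for a trained encoder.
        self.weights = [
            [rng.uniform(-1.0, 1.0) for _ in range(n_voxels)]
            for _ in range(embed_dim)
        ]

    def encode(self, voxels: Vector) -> Vector:
        if len(voxels) != len(self.weights[0]):
            raise ValueError("unexpected number of voxels")
        # One dot product per embedding dimension (a plain linear projection).
        return [sum(w * v for w, v in zip(row, voxels)) for row in self.weights]


class ToyVideoGenerator:
    """Module 2 (hypothetical stand-in): turns an embedding into frames."""

    def __init__(self, n_frames: int, height: int, width: int) -> None:
        self.n_frames = n_frames
        self.height = height
        self.width = width

    def generate(self, embedding: Vector) -> List[Frame]:
        # Seed from the embedding so the same brain signal yields the same clip.
        rng = random.Random(repr(embedding))
        return [
            [[rng.random() for _ in range(self.width)] for _ in range(self.height)]
            for _ in range(self.n_frames)
        ]


def reconstruct_video(
    voxels: Vector, encoder: ToyFMRIEncoder, generator: ToyVideoGenerator
) -> List[Frame]:
    """Chain the two modules: fMRI signal -> embedding -> frame sequence."""
    return generator.generate(encoder.encode(voxels))


if __name__ == "__main__":
    encoder = ToyFMRIEncoder(n_voxels=4, embed_dim=3)
    generator = ToyVideoGenerator(n_frames=2, height=2, width=3)
    clip = reconstruct_video([0.1, 0.2, 0.3, 0.4], encoder, generator)
    print(len(clip), "frames of", len(clip[0]), "x", len(clip[0][0]), "pixels")
```

Keeping the encoder and the generator as separate objects mirrors the split the article describes, where the part that reads the brain signal and the part that produces video are distinct modules.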

Applications and Findings

The researchers highlight several key findings from their study. They emphasize the dominance of the visual cortex in visual perception and note that the fMRI encoder operates hierarchically, starting with structural information and progressing to more abstract visual features. They also report that the encoder evolves through each learning stage, which shows its capacity to handle increasingly nuanced information as training continues.

Future Prospects

The authors believe that MinD-Video has promising applications in neuroscience and brain-computer interfaces as larger models continue to develop. This technology could pave the way for advancements in understanding human visual perception and potentially lead to new interfaces that allow for more intuitive human-machine interactions.

Price Information

As of the latest information, MinD-Video is a research project and not yet available for commercial use. Therefore, pricing details are not applicable.

Common Problems

While MinD-Video shows great promise, it is still in the research phase. Common challenges in this field include the complexity of accurately interpreting brain activity, the need for extensive datasets to train such models, and the ethical considerations surrounding the use of brain-reading technologies.

Alternatives to AI Reconstructs ‘High-Quality’ Video Directly from Brain

Originality AI

At Originality.ai, we provide a complete set of tools (AI checker, plagiarism checker, fact checker, and readability checker) that helps website owners, content marketers, writers, publishers, and any copy editor publish with integrity.

Undetectable AI

Use our free AI detector to check whether your AI-generated content will be flagged. Then click to humanize your AI text and bypass all AI detection tools.

Teaser’s AI dating app turns you into a chatbot

Source: TechCrunch

ChatGPT on iOS gets Siri Support

Source: The Verge

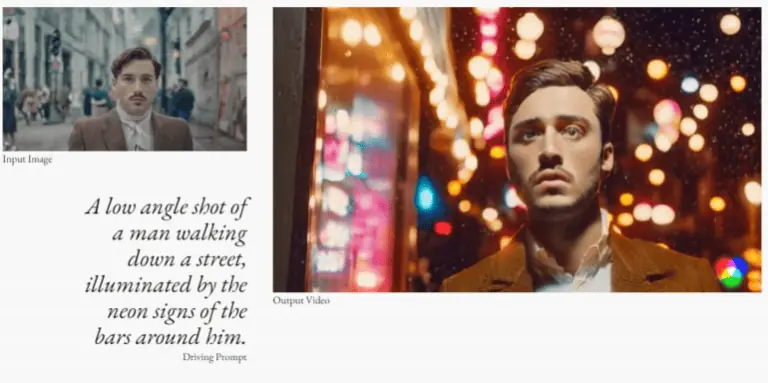

Runway’s Gen-2 shows the limitations of today’s text-to-video tech

Source: TechCrunch

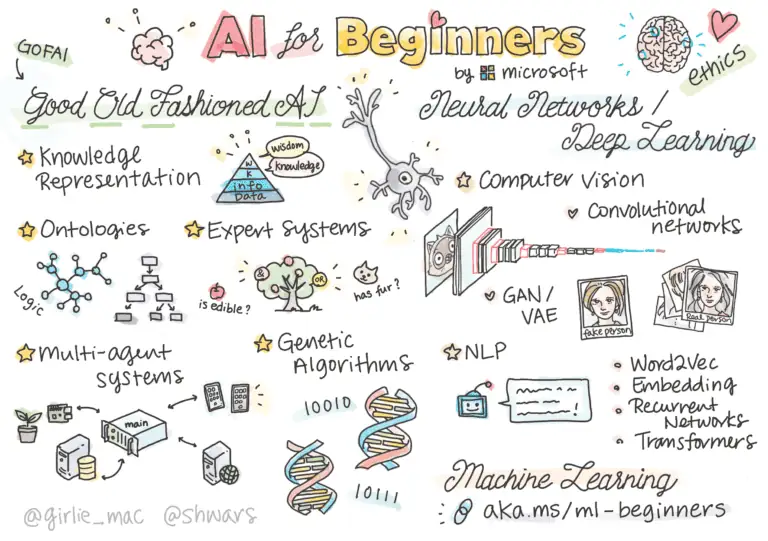

Microsoft’s new free AI courses for beginners

Source: Microsoft

“OpenAI’s plans according to Sam Altman” removed by OpenAI

Source: Human Loop

Is AI Creativity Possible?

Source: Gizmodo

This new app rewrites all clickbait headlines

Source: The Verge